SkyPilot: How to run AI models and workloads easily on any infra and save money too

What the Heck is SkyPilot?

SkyPilot is like a universal remote control, but instead of flipping through channels of reality TV garbage, it manages your cloud infrastructure. Think Kubernetes clusters, cloud VMs, and even that dusty old server in your closet – all working together in a beautiful, chaotic symphony orchestrated for your AI workloads. It's designed to make your life easier, especially if you're dealing with the ever-growing demands of Artificial Intelligence. Because, let's face it, AI is hungry, and it needs a lot of compute power.

SkyPilot offers a simplified interface using three core concepts:

- Clusters: The basic building blocks. Think of them as groups of VMs or Kubernetes pods.

- Jobs: The actual programs you want to run. Like "train this ridiculously large language model."

- Services: For serving your AI models, because what's the point of having a fancy AI if no one can use it?

These abstractions cover the entire AI lifecycle, from initial development and training to finetuning, hyperparameter optimization, and serving. Basically, everything but making the coffee (though, with enough GPUs, you could probably train an AI to do that too).

Why Bother with SkyPilot? (Besides Avoiding Cloud-Induced Headaches)

SkyPilot has some sweet benefits that might just make you reconsider your current, probably overly complicated, setup:

Unified Execution (Because Juggling Clouds is for Clowns, Not Engineers)

No matter how many clouds, regions, or clusters you're dealing with, SkyPilot provides a single, unified interface. You tell it what you want to run, and it figures out the messy details of where and how. It's like having a really efficient, slightly sarcastic, personal assistant for your cloud infrastructure.

Cost and Capacity Optimization (Save Money, Buy More GPUs... or Pizza)

SkyPilot automatically picks the cheapest and most available infrastructure for your workload. It's like a bargain hunter for compute resources, constantly sniffing out the best deals. So you can spend less time worrying about your cloud bill and more time, you know, actually doing science (or whatever it is you do).

Auto-Failover (Because Stuff Happens, Especially in the Cloud)

You can give SkyPilot a list of infrastructure options, from "anywhere in the world" to "that specific server rack in Antarctica." If one option is unavailable (because, let's face it, cloud providers aren't perfect), SkyPilot automatically tries the next best choice. It's like having a backup plan for your backup plan, but without all the manual configuration.

No Cloud Lock-in (Freedom! ... Sort Of)

Adding new clouds, regions, or clusters later on? No problem. Your existing workloads can easily run on them without any major code changes or existential dread. SkyPilot embraces the Sky Computing vision, which basically means "play nice with all the clouds."

Digging Deeper: Clusters, Jobs, and Services

Let's break down those core concepts a bit further:

Clusters: Your Virtual Compute Playground

A cluster is SkyPilot's fundamental resource unit. It can be one or more VMs or Kubernetes pods, all hanging out in the same location. Think of it as your dedicated workspace for a particular task.

You launch a cluster using the sky launch command. Yes, it's that simple.

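```shell
$ sky launch                              # launch a cluster
$ sky launch --gpus L4:8                  # 8 NVIDIA L4 GPUs
$ sky launch --num-nodes 10 --cpus 32+    # 10 nodes, each with at least 32 vCPUs
$ sky launch --down cluster.yaml          # launch from a YAML spec; tear down when its jobs finish
$ sky launch --help                       # See all the glorious options.
```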

Or, if you prefer Python:

```python
import sky

# Request 8 NVIDIA L4 GPUs and launch (or reuse) a cluster named 'my-cluster'.
task = sky.Task().set_resources(sky.Resources(accelerators='L4:8'))
sky.launch(task, cluster_name='my-cluster')
```

With a cluster, you can:

- SSH into any node (because sometimes you just need to get your hands dirty).

- Connect your favorite IDE (VSCode or whatever floats your boat).

- Submit and queue a bunch of jobs.

- Set it to automatically shut down to save those precious cloud dollars.

- Launch and manage many ephemeral clusters (because who needs permanence anyway?).

You can even bring your own Docker or VM image, or use SkyPilot's defaults, which are surprisingly sensible and handle things like CUDA versions for you.

Remember, a SkyPilot cluster is virtual. It's a collection of resources carved out from the physical clusters you provide (either Kubernetes clusters or your own machines).

For more info, check out the quickstart and dev-cluster documentation.

Jobs: Getting Stuff Done (Without the Manual Labor)

A job is simply the program you want to run. SkyPilot supports two main types:

- Jobs on Clusters: Use sky exec. These jobs run on an existing cluster and reuse its setup. Good for interactive development and debugging.

- Managed Jobs: Use sky jobs launch. These jobs get their own temporary cluster and have auto-recovery. Perfect for those long-running, fault-tolerant tasks, especially on spot instances (which are like the clearance rack of cloud computing).

A job can contain one or more tasks, but usually, it's just one.

Jobs on Clusters: The Interactive Approach

sky exec is your friend for running jobs on an existing cluster.

```shell
sky exec my-cluster --gpus L4:1 --workdir=. -- python train.py
sky exec my-cluster train.yaml  # YAML is your friend.

# Fractional GPUs? No problem!
sky exec my-cluster --gpus L4:0.5 -- python eval.py

# Multi-node? Sure, why not?
sky exec my-cluster --num-nodes 2 -- hostname
```

Or, in Python:

```python
import sky

# Assuming 'my-cluster' is already up and running.

# Queue a job with 1 GPU.
train = sky.Task(run='python train.py').set_resources(
    sky.Resources(accelerators='L4:1'))
# Or load the same task from YAML, because typing is hard:
# train = sky.Task.from_yaml('train.yaml')
sky.exec(train, cluster_name='my-cluster')

# Queue a job with half a GPU (named eval_task to avoid shadowing Python's eval).
eval_task = sky.Task(run='python eval.py').set_resources(
    sky.Resources(accelerators='L4:0.5'))
sky.exec(eval_task, cluster_name='my-cluster')
```

Check out the job-queue documentation for more details.
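To make the queueing idea concrete, here is a toy model of how fractional GPU requests can share one node. It is a plain-Python sketch, not SkyPilot's actual scheduler: `Node` and `schedule_fifo` are hypothetical names, and it assumes a strict first-in, first-out queue that computes which queued jobs can start right now given the free GPU capacity.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical stand-in for one cluster node's GPU bookkeeping."""
    total_gpus: float
    used_gpus: float = 0.0

    def can_fit(self, gpus):
        # Small tolerance so that e.g. 0.5 + 0.5 counts as exactly one GPU.
        return self.used_gpus + gpus <= self.total_gpus + 1e-9


def schedule_fifo(node, requests):
    """Start queued (name, gpus) requests in FIFO order while they fit.

    Returns (running, pending). Strict FIFO: the first request that does not
    fit stays at the head of the queue and everything behind it waits too.
    """
    queue = deque(requests)
    running = []
    while queue and node.can_fit(queue[0][1]):
        name, gpus = queue.popleft()
        node.used_gpus += gpus
        running.append(name)
    pending = [name for name, _ in queue]
    return running, pending


# One L4 on the node: both half-GPU evals start, the full-GPU training job waits.
node = Node(total_gpus=1)
running, pending = schedule_fifo(
    node, [("eval-a", 0.5), ("eval-b", 0.5), ("train", 1.0)])
print(running, pending)  # ['eval-a', 'eval-b'] ['train']
```

Strict FIFO is the simplest policy to reason about, but it also means a big job at the head of the queue holds up smaller ones behind it.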

Managed Jobs: Set It and Forget It (Mostly)

Managed jobs handle the messy details of provisioning a temporary cluster and recovering from failures. They use a lightweight jobs controller for monitoring and recovery. sky jobs launch is the command you need.

These are great for running jobs on spot instances (save up to 6x on costs!), or for scaling to many parallel jobs.

The recommended workflow: develop and debug interactively with clusters, then use managed jobs for large-scale runs.
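Here is a rough, plain-Python picture of what "auto-recovery" means for a managed job on spot instances. Everything in it is hypothetical: `run_managed_job`, the `max_recoveries` cap, and the simulated preemption steps are illustration only, not SkyPilot's jobs controller. It also assumes the job checkpoints its progress to persistent storage, so each relaunch resumes from the last checkpoint instead of starting over.

```python
def run_managed_job(total_steps, preemption_steps, max_recoveries=3):
    """Toy model of a managed job that survives spot preemptions.

    Each loop iteration is one attempt on a freshly provisioned (temporary)
    cluster. `preemption_steps` lists, in order, the step at which each
    attempt's spot VM gets reclaimed. Hypothetical, for illustration only.
    """
    checkpoint = 0  # last step saved to persistent storage (assumed every step)
    recoveries = 0
    # A trailing None means "this attempt is never preempted".
    for preempt_at in list(preemption_steps) + [None]:
        if preempt_at is None or preempt_at >= total_steps:
            # This attempt runs from `checkpoint` to the end uninterrupted.
            return {"status": "SUCCEEDED", "recoveries": recoveries,
                    "last_step": total_steps}
        # Preempted: progress up to `preempt_at` survives via the checkpoint.
        checkpoint = max(checkpoint, preempt_at)
        if recoveries >= max_recoveries:
            return {"status": "FAILED", "recoveries": recoveries,
                    "last_step": checkpoint}
        recoveries += 1  # relaunch on new capacity and resume from checkpoint


print(run_managed_job(100, [30, 70]))
# {'status': 'SUCCEEDED', 'recoveries': 2, 'last_step': 100}
```

Two preemptions cost two relaunches, but the job still finishes. In this toy, a job that keeps getting preempted past the (made-up) cap is reported as failed, keeping the progress it checkpointed.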

Services: Sharing Your AI Genius with the World

A service is for serving your AI models. It can have multiple replicas, spread across different locations, pricing models, or even GPU types. Because redundancy is good, and options are even better.

Check out sky-serve to get started.
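Spreading replicas around only helps if incoming requests get routed to the ones that are actually up. The sketch below is a hypothetical round-robin router in plain Python. SkyServe ships its own load balancer, and this is neither its implementation nor necessarily its default policy. The replica names are made up.

```python
class RoundRobinRouter:
    """Hypothetical toy router: rotates requests across replicas that are ready."""

    def __init__(self, replicas):
        self.replicas = list(replicas)
        self._next = 0  # index of the replica to try first on the next request

    def pick(self, ready):
        """Return the next replica in rotation that appears in `ready`."""
        for _ in range(len(self.replicas)):
            replica = self.replicas[self._next]
            self._next = (self._next + 1) % len(self.replicas)
            if replica in ready:
                return replica
        raise RuntimeError("no ready replicas")


# Made-up replicas in different locations and pricing models.
router = RoundRobinRouter(["us-spot-1", "eu-ondemand-1", "us-ondemand-2"])
ready = {"us-spot-1", "us-ondemand-2"}  # say the EU replica is still starting up
print([router.pick(ready) for _ in range(4)])
# ['us-spot-1', 'us-ondemand-2', 'us-spot-1', 'us-ondemand-2']
```

Requests rotate across replicas and skip any that are not ready, so losing one location reduces capacity instead of taking the whole service down.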

Bringing Your Own Infrastructure (BYOI... Almost)

SkyPilot plays nicely with your existing infrastructure: clouds, Kubernetes clusters, or even those on-premise machines you've been meaning to clean. It uses each infrastructure's native authentication (cloud credentials, kubeconfig, SSH).

Cloud VMs: The Usual Suspects

SkyPilot can launch VMs on most major cloud providers. Run sky check to see if you're good to go.

See cloud-account-setup for details. You can even set up specific roles and permissions for SkyPilot, if you're into that sort of thing.

Kubernetes Clusters: Container Orchestration Goodness

Bring your existing Kubernetes clusters (managed or on-prem) into the SkyPilot fold. Auto-failover between multiple clusters is supported, because who needs single points of failure?

See kubernetes-overview.

Existing Machines: Dust Off Those Old Servers

Got a bunch of machines with IP addresses you can SSH into? Bring them into SkyPilot!

SkyPilot's Secret Sauce: Cost and Capacity Optimization

Whenever SkyPilot needs to provision resources, it automatically optimizes for cost and capacity. It's like a built-in accountant that's always looking for the best deal.

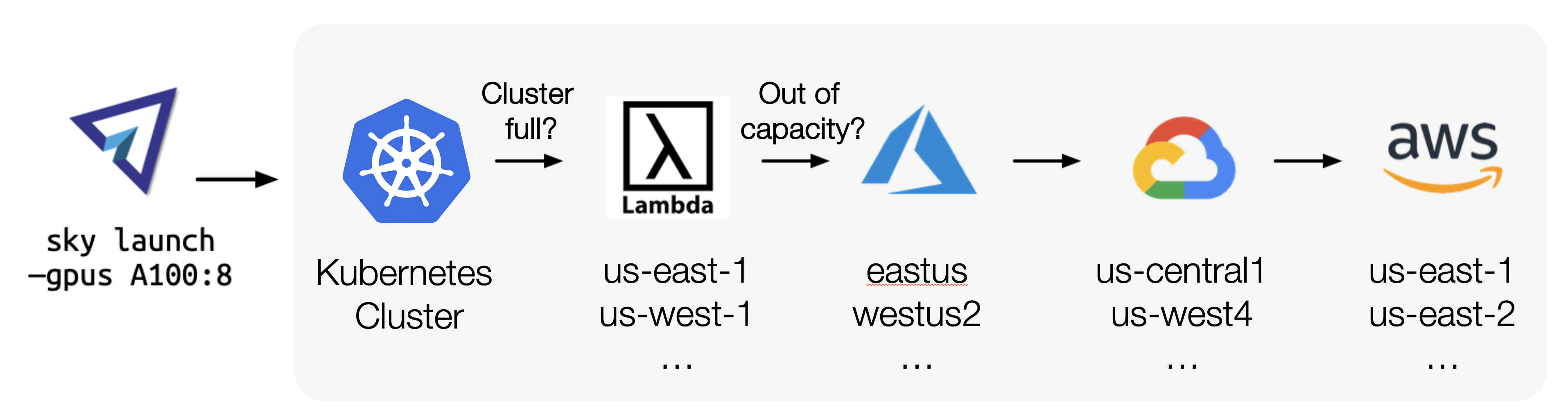

For example, if you need a cluster with 8 A100 GPUs, SkyPilot will try all the options in your specified search space, starting with the cheapest and most available, and automatically failing over if needed:

This means no more worrying about specific infrastructure details, manual retries, or complicated setups. You get more GPU capacity and save money. It's a win-win!
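The core loop behind "cheapest first, fail over if needed" is simple enough to sketch in a few lines. This is an illustration under stated assumptions, not SkyPilot's optimizer: `Candidate`, `provision_cheapest`, and the `try_provision` callback are hypothetical, and the prices are made up. A real optimizer has more to weigh than price, which this toy ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical place to run a job: a location label plus an hourly price."""
    infra: str
    hourly_usd: float


def provision_cheapest(candidates, try_provision):
    """Try candidates from cheapest to priciest, failing over on no capacity.

    `try_provision(candidate) -> bool` stands in for a real provisioning call
    that returns False when the location has no capacity. Returns the chosen
    candidate and the list of locations attempted, in order.
    """
    attempted = []
    for cand in sorted(candidates, key=lambda c: c.hourly_usd):
        attempted.append(cand.infra)
        if try_provision(cand):
            return cand, attempted
    raise RuntimeError(f"no capacity anywhere in the search space: {attempted}")


# Made-up prices for an 8x A100 request; the cheapest location is sold out.
cands = [Candidate("cloud-a/region-1", 30.0),
         Candidate("cloud-b/region-2", 25.0),
         Candidate("cloud-c/region-3", 40.0)]
sold_out = {"cloud-b/region-2"}
chosen, attempted = provision_cheapest(cands, lambda c: c.infra not in sold_out)
print(chosen.infra, attempted)
# cloud-a/region-1 ['cloud-b/region-2', 'cloud-a/region-1']
```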

You can define the search space for each workload, making it as broad or specific as you like. Examples:

- "Use the cheapest GPU from this set: {A10g:8, A10:8, L4:8, A100:8}"

- "Use my Kubernetes cluster or any cloud I have access to"

- "Give me a spot or on-demand H100 GPU"

- "Only use AWS's European regions"

- "Use this specific zone, region, or cloud"

See auto-failover for the nitty-gritty details.
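A search space is essentially a filter applied before the cheapest-first search. The hypothetical `search_space` helper below expresses a few of the example constraints above (a GPU set, a cloud, a region prefix, spot vs. on-demand) as plain-Python filters. It is not how SkyPilot parses its resource specs, and the offers and prices are invented; it also assumes AWS European region names start with `eu-`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    """Hypothetical priced offering; every value in the example below is made up."""
    cloud: str
    region: str
    accelerator: str  # SkyPilot-style "NAME:COUNT", e.g. "L4:8"
    use_spot: bool
    hourly_usd: float


def search_space(offers, accelerators=None, clouds=None, region_prefix=None,
                 spot=None):
    """Keep offers matching every constraint that is set (None = no constraint),
    ordered cheapest first, i.e. the order a failover loop would try them in."""
    def matches(o):
        return ((accelerators is None or o.accelerator in accelerators)
                and (clouds is None or o.cloud in clouds)
                and (region_prefix is None or o.region.startswith(region_prefix))
                and (spot is None or o.use_spot == spot))
    return sorted((o for o in offers if matches(o)), key=lambda o: o.hourly_usd)


offers = [
    Offer("aws", "us-east-1", "A100:8", False, 30.0),
    Offer("aws", "eu-west-1", "L4:8", False, 8.0),
    Offer("aws", "eu-central-1", "A10g:8", True, 4.0),
    Offer("gcp", "europe-west4", "L4:8", True, 3.0),
]
# "Cheapest GPU from this set" combined with "only use AWS's European regions".
picks = search_space(offers,
                     accelerators={"A10g:8", "A10:8", "L4:8", "A100:8"},
                     clouds={"aws"}, region_prefix="eu-")
print([o.region for o in picks])  # ['eu-central-1', 'eu-west-1']
```

Leaving a constraint as `None` keeps that dimension open, which is the difference between "use any cloud I have access to" (nothing pinned) and "only use AWS's European regions" (cloud and region both pinned).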

Local or Team Deployment: Choose Your Adventure

SkyPilot can be used locally or deployed as a centralized API server for your team. Team deployment lets you share and manage resources across multiple users:

- Deploy Once, Use Anywhere: Deploy the SkyPilot API server in Kubernetes or on a cloud VM and access it from anywhere.

- Resource Sharing: Team members can share resources, because sharing is caring (and efficient).

- Easy Onboarding: New members can run SkyPilot commands without setting up their own cloud credentials.

- Global View and Control: Admins get a single dashboard to monitor all the team's resources.

This article is an enhanced version of the original documentation, which can be found at SkyPilot Documentation.
