SAPwned: Wiz Research revealed SAP AI vulnerabilities that gave attackers full control of cloud instances and exposed private AI data

TL;DR

Grab your black hats, fellas: the Wiz Research team has been poking around AI services to see if they're really as secure as they seem. This time they audited SAP AI Core, a PaaS for developing, training, and running AI services in a managed, scalable way. Spoiler alert: they found some cracks in the armor. Think leaky logs, exposed files, and even a way to hijack the whole system! The good news? Wiz reported everything to SAP, SAP patched things up faster than you can say "AI apocalypse," and your data is safe. But it's a good reminder that even with AI, you gotta double-check the locks.

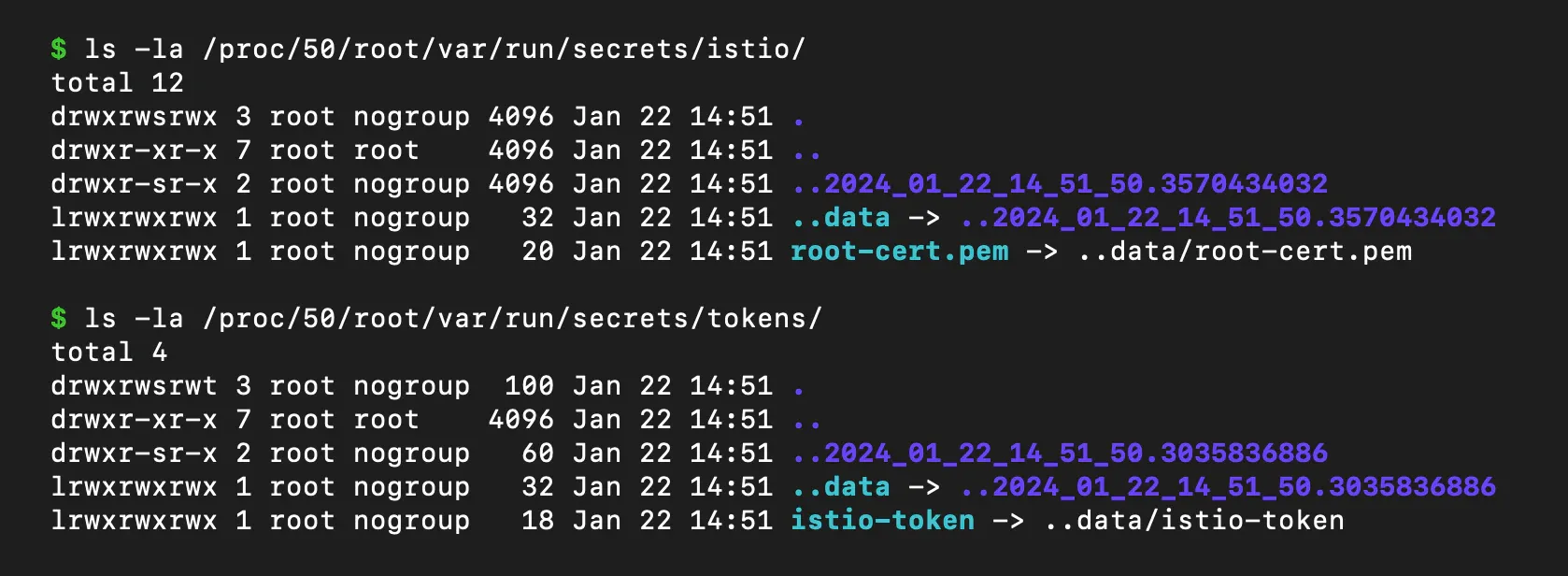

Istio and Kubernetes misconfigured: The real vulnerability

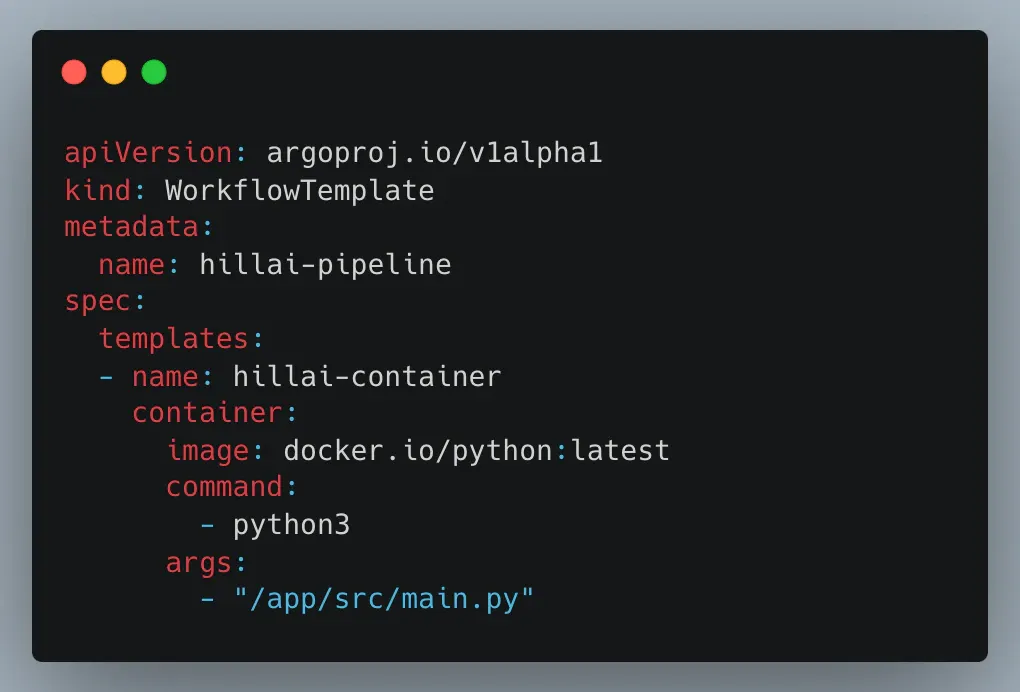

Remember those "choose your own adventure" books? The researchers' journey into SAP AI Core started out pretty tame. They acted like regular customers, just setting up an AI project. But things got interesting when they realized they could run their own code inside SAP's system. Not a bug, totally by design. But it's like giving everyone a screwdriver when they visit your spaceship: you never know who's going to start tinkering with the engine.

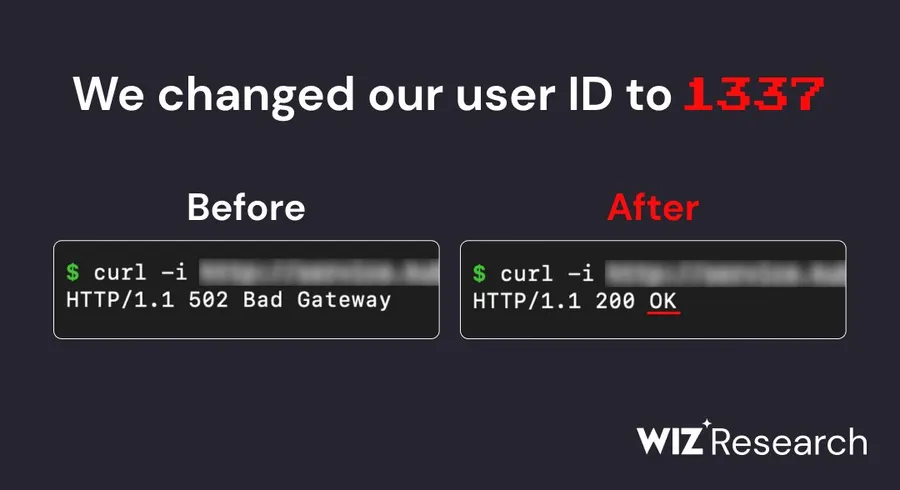

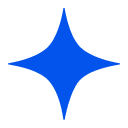

First hurdle? An Istio proxy was locking down their network access. But like any good hacker (they're the good guys, promise!), they found a loophole. Turns out, running their code as user ID "1337" (we see you, fellow nerds) was like getting a backstage pass to the whole network. That's the UID Istio's own sidecar proxy runs as, and traffic from that UID is exempt from the proxy's redirect rules. From there, they uncovered a chain of vulnerabilities, like breadcrumbs leading them deeper into the system:

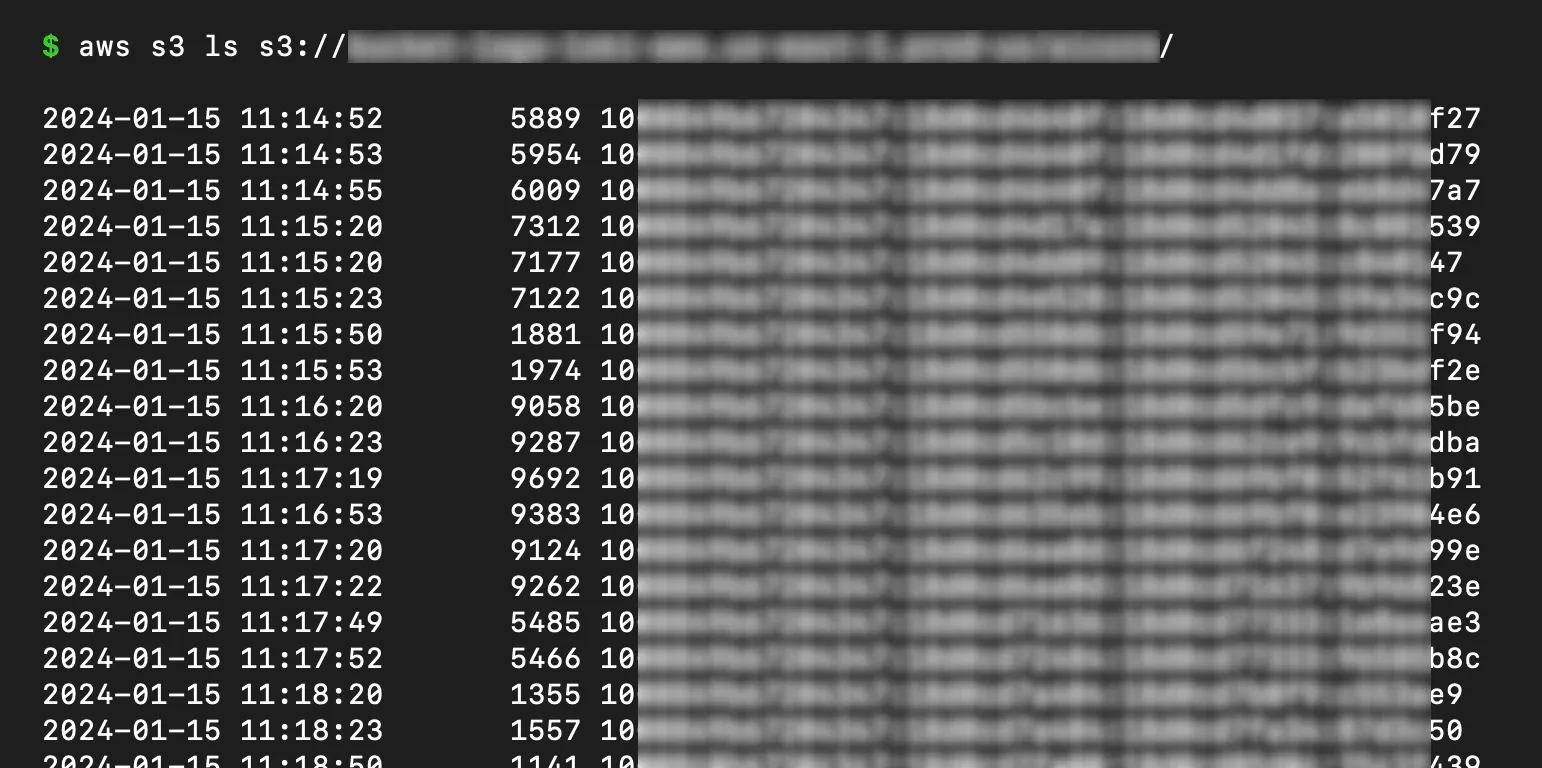
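On the defensive side, the UID trick is the kind of thing a check at deploy time could flag. Here's a minimal sketch, not SAP's actual fix: it scans a pod spec (as a plain Python dict) for workloads asking to run as UID 1337. The function name `find_istio_uid_abuse` is hypothetical, and the sketch assumes the sidecar is the only container named `istio-proxy`, which is the name Istio's injection normally uses.

```python
ISTIO_PROXY_UID = 1337  # UID Istio reserves for its sidecar proxy


def find_istio_uid_abuse(pod_spec: dict, sidecar_name: str = "istio-proxy") -> list[str]:
    """Return a finding for each non-sidecar workload that asks for UID 1337."""
    findings = []

    # Pod-level securityContext applies to every container unless overridden.
    pod_ctx = pod_spec.get("securityContext") or {}
    if pod_ctx.get("runAsUser") == ISTIO_PROXY_UID:
        findings.append("pod: runAsUser is 1337")

    for container in pod_spec.get("containers", []):
        name = container.get("name", "?")
        if name == sidecar_name:
            continue  # the real sidecar is expected to run as 1337
        ctx = container.get("securityContext") or {}
        if ctx.get("runAsUser") == ISTIO_PROXY_UID:
            findings.append(f"container {name}: runAsUser is 1337")

    return findings


# Hypothetical pod spec: a user workload posing as the proxy's UID.
spec = {
    "containers": [
        {"name": "train", "securityContext": {"runAsUser": 1337}},
        {"name": "istio-proxy", "securityContext": {"runAsUser": 1337}},
    ]
}
print(find_istio_uid_abuse(spec))
```

In a real cluster this logic would live in an admission controller or policy engine rather than a script, so the pod gets rejected before it ever starts.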

Leaking Loki

SAP's logging service, Grafana Loki, was spilling AWS secrets like it was gossip hour. We're talking access keys to buckets full of logs. Juicy stuff!
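One cheap safeguard against this kind of leak is scrubbing key-shaped strings before config or log text leaves a service. The sketch below is an illustration, not what SAP or Loki does: it masks anything shaped like an AWS access key ID (the `AKIA`/`ASIA` prefix plus 16 uppercase alphanumerics). The sample config keys are made up, and the key itself is AWS's public documentation example.

```python
import re

# AWS access key IDs are 20 characters: a 4-letter prefix such as AKIA
# (long-term) or ASIA (temporary), then 16 uppercase letters or digits.
AWS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def redact_aws_key_ids(text: str) -> str:
    """Replace anything that looks like an AWS access key ID with a marker."""
    return AWS_KEY_ID.sub("[REDACTED_AWS_KEY_ID]", text)


# Hypothetical config snippet; field names are illustrative only.
sample = "storage:\n  access_key_id: AKIAIOSFODNN7EXAMPLE\n  bucket: logs\n"
print(redact_aws_key_ids(sample))
```

Pattern matching only catches the key ID, not the secret key that goes with it, so it's a backstop. The real fix is not serving config with embedded credentials to unauthenticated callers in the first place.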

EFS Exposé

Imagine leaving your diary open in a public park. That's what SAP did with its AWS Elastic File System (EFS) shares, giving anyone with access to the internal network a view of mountains of customer data. Oops!

Helm: More Like "Help Yourself"

SAP's Helm server (a tool for managing software packages) was the real MVP. It basically handed over the keys to the kingdom: we're talking access to SAP's internal Docker registry, its Artifactory server, and even the ability to deploy code with full admin privileges. Talk about hitting the jackpot!

With great power comes, well, the responsibility to tell someone they left the back door open. The researchers reported all of these vulnerabilities to SAP, which has since battened down the hatches. No harm, no foul. But it highlights a crucial point: security in the age of AI is a whole new ballgame.

AI: Not Just a Buzzword, But a Security Challenge

Here's the thing about AI: it thrives on data, and lots of it. That data is often sensitive, making AI platforms a prime target for attackers. And because these platforms need to run arbitrary code for training models, things can get messy fast if proper isolation isn't in place.

Think of it like this: you wouldn't let strangers tinker with your house's electrical panel, right? That's kind of what's happening here. SAP's mistake wasn't in allowing user code (that's the whole point of AI platforms!), but in assuming its internal services were inherently safe just because they sat behind a single security checkpoint.

Lessons Learned: Because Nobody Likes a Repeat Performance

So what's the takeaway from their little adventure?

- Defense in Depth: One layer of security is like a screen door on a submarine - it might look good, but it won't hold up under pressure. You need multiple layers, each protecting different parts of the system.

- Kubernetes Conundrums: Kubernetes (K8s) is the darling of the cloud world, but it can be tricky to secure. When multiple users share a K8s cluster, it's crucial to create strong boundaries between their workloads and the underlying infrastructure.

- AI Ain't Easy: AI development comes with its own set of security challenges. Platforms need to balance flexibility for users with robust safeguards to prevent malicious code from wreaking havoc. It's like juggling chainsaws while riding a unicycle – definitely not for amateurs!
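To make the "strong boundaries" point concrete, here's a small sketch of one extra layer: a default-deny Kubernetes NetworkPolicy for a tenant namespace, built as a Python dict and printed as JSON (which `kubectl apply -f` accepts). The namespace `tenant-a` and the policy name are placeholders. It also assumes the cluster's network plugin actually enforces NetworkPolicy, which not all of them do.

```python
import json


def default_deny_policy(namespace: str) -> dict:
    """Build a NetworkPolicy that blocks all ingress and egress in a namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector matches every pod in the namespace
            "policyTypes": ["Ingress", "Egress"],  # no rules listed, so both are denied
        },
    }


# Placeholder namespace; allow-rules for DNS and required services would be
# added as separate, narrower policies.
print(json.dumps(default_deny_policy("tenant-a"), indent=2))
```

The design idea is that this layer is enforced outside the pod, so it doesn't rely on a sidecar inside the tenant's own workload doing the right thing.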

The cloud is the Wild West, and we're the sheriffs trying to keep things from going full-on Deadwood. Stay tuned for more tales from the trenches as we continue to explore the ever-evolving world of cloud security!

Security Researchers and DevSecOps teams are a must

Big thanks to the intrepid minds at Wiz Research who made this investigation possible: Hillai Ben-Sasson (@hillai), Shir Tamari (@shirtamari), Nir Ohfeld (@nirohfeld), Sagi Tzadik (@sagitz_), and Ronen Shustin (@ronenshh). Offensive security researchers and DevSecOps teams play a crucial role in finding and fixing vulnerabilities before malicious actors can exploit them. We salute you!
